Kubernetes lets you specify a “Resource Request” and a “Resource Limit” when defining how many resources a container within a pod should receive.

Containerising applications and running them on Kubernetes doesn’t mean we can forget all about resource utilization. Our thought process may have changed because we can scale out our application much more easily as demand increases, but we often still need to consider how our containers might fight each other for resources. Resource Requests and Limits can help stop the “noisy neighbour” problem in a Kubernetes cluster.

To put things simply, a resource request specifies the minimum amount of resources a container needs to run successfully. Thought of another way, it is a guarantee from Kubernetes that this amount of CPU or memory will always be allocated to the container.

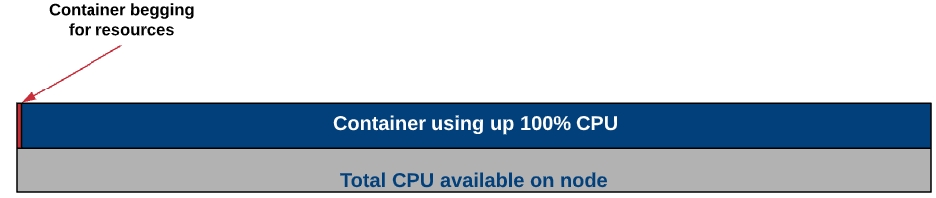

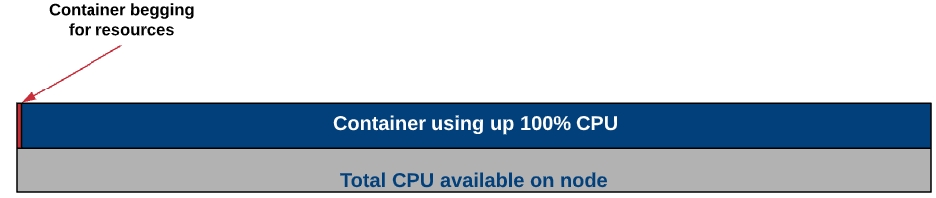

Why would you worry about the minimum amount of resources guaranteed to a pod? It helps prevent one container from using up all of a node’s resources and starving the other containers of CPU or memory. For instance, if I had two containers on a node, one container could consume 100% of that node’s processor. Meanwhile, the other container would likely not work very well because the processor is being monopolized by its “noisy neighbour”.

What a resource request can do is ensure that at least a small part of the processor’s time is reserved for both containers. This way, if there is resource contention, each pod has a guaranteed minimum amount of resources with which to keep functioning.

As you might guess, a resource limit is the maximum amount of CPU or memory that can be used by a container. The limit represents the upper bound of how much CPU or memory a container within a pod can consume in a Kubernetes cluster, regardless of whether the cluster is under resource contention.

Limits prevent containers from taking up more resources on the cluster than you’re willing to let them.

As a general rule, all containers should have a CPU and memory request before being deployed to a cluster. This ensures that if resources run low, your container can still do the minimum amount of work needed to stay healthy until those resources free up again (hopefully).

Limits are often used in conjunction with requests to create a “guaranteed pod”: one in which every container’s request and limit are set to the same value for both CPU and memory (Kubernetes calls this the Guaranteed QoS class). In that situation, the container always has the same amount of CPU available to it, no more and no less.
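To make the “guaranteed pod” idea concrete, here is a minimal Python sketch of how Kubernetes derives a pod's QoS class from its containers' requests and limits: Guaranteed when every container has equal CPU and memory requests and limits, BestEffort when no container sets any, and Burstable otherwise. It is a simplification under stated assumptions: init containers are ignored, quantities are compared as plain strings, and the container specs are hypothetical.

```python
# Simplified QoS classification (ignores init containers and extended
# resources). Quantities are compared as strings, so "1" and "1000m" would
# wrongly be treated as different values.

def qos_class(containers: list[dict]) -> str:
    any_set = False
    guaranteed = True
    for c in containers:
        res = c.get("resources", {})
        requests = res.get("requests", {})
        limits = res.get("limits", {})
        if requests or limits:
            any_set = True
        for name in ("cpu", "memory"):
            limit = limits.get(name)
            # If only a limit is given, Kubernetes defaults the request to it.
            request = requests.get(name, limit)
            if limit is None or request != limit:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


# Hypothetical container with matching CPU and memory requests/limits.
pinned = {"resources": {"requests": {"cpu": "1000m", "memory": "256Mi"},
                        "limits": {"cpu": "1000m", "memory": "256Mi"}}}
print(qos_class([pinned]))  # Guaranteed
```

Setting only a CPU request and limit (no memory) would make the same pod Burstable, which is why both resources matter for a guaranteed pod.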

At this point, you may be thinking about setting a high “request” value to make sure plenty of resources are available for your container. This might sound like a good idea, but it can have dramatic consequences for scheduling on the Kubernetes cluster. If you set a high CPU request, for example 2 CPUs, your pod can ONLY be scheduled on nodes that have 2 full CPUs available that aren’t already reserved by other pods’ requests. In the example below, the 2 vCPU pods couldn’t be scheduled on the cluster. However, if you were to lower the “request” to, say, 1 vCPU, they could.

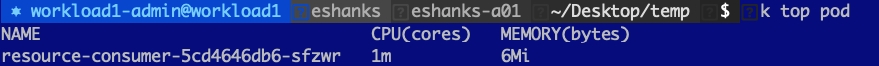

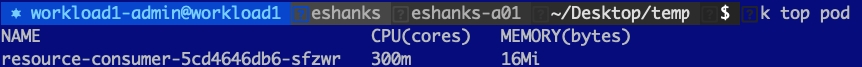
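The scheduling constraint described above can be sketched as a simple fit check. This is a minimal illustration, assuming only CPU matters; the real scheduler also checks memory, taints, affinity and more, and the node sizes below are made up.

```python
# A pod fits on a node only if its CPU request is no larger than the node's
# allocatable CPU minus the requests of pods already placed there.
# Actual usage does not matter here, only requests.

def fits(pod_request_m: int, allocatable_m: int, existing_requests_m: list[int]) -> bool:
    free_m = allocatable_m - sum(existing_requests_m)
    return pod_request_m <= free_m


def schedulable_nodes(pod_request_m: int, nodes: dict[str, tuple[int, list[int]]]) -> list[str]:
    """Names of the nodes where a pod with this CPU request would fit."""
    return [
        name
        for name, (allocatable_m, requests_m) in nodes.items()
        if fits(pod_request_m, allocatable_m, requests_m)
    ]


# Hypothetical cluster: two nodes with 1900m allocatable CPU and one 500m pod each.
nodes = {"node-a": (1900, [500]), "node-b": (1900, [500])}
print(schedulable_nodes(2000, nodes))  # []  (a 2 vCPU request fits nowhere)
print(schedulable_nodes(1000, nodes))  # ['node-a', 'node-b']
```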

Let’s try out a CPU limit on a pod and see what happens when we try to use more CPU than we’re allowed. Before setting the limit, though, let’s look at a pod with a single container under normal conditions. I’ve deployed a resource consumer container in my cluster and, by default, you can see that it is using 1m CPU (cores) and 6Mi (mebibytes) of memory.

NOTE: CPU is measured in millicores, so 1000m = 1 CPU core. Memory is measured in mebibytes (Mi), where 1Mi = 1024 × 1024 bytes.

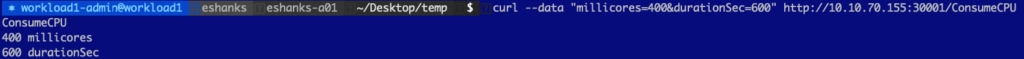
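To make these units concrete, here is a minimal sketch that converts the quantity strings used in manifests and metrics into plain numbers. It handles only a subset of the suffixes Kubernetes accepts (plain numbers and `m` for CPU; integer values with `Ki`/`Mi`/`Gi`/`Ti` or `k`/`M`/`G`/`T` for memory), so treat it as an illustration rather than a complete quantity parser.

```python
# Convert a subset of Kubernetes quantity strings into numbers.
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL_SUFFIXES = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def cpu_to_millicores(quantity: str) -> int:
    """'400m' -> 400, '2' -> 2000, '0.5' -> 500."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_to_bytes(quantity: str) -> int:
    """'6Mi' -> 6 * 2**20, '1G' -> 10**9, '512' -> 512 (integers only)."""
    for suffix, factor in {**BINARY_SUFFIXES, **DECIMAL_SUFFIXES}.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


print(cpu_to_millicores("400m"), memory_to_bytes("6Mi"))
```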

Ok, now that we have seen the “no-load” state, let us add some CPU load by making a request to the pod. Here, I’ve increased the CPU usage on the container to 400 millicores.

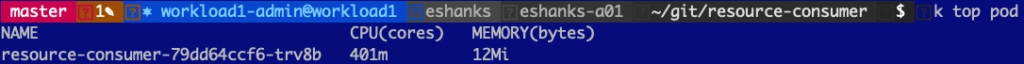

After the metrics start coming in, you can see that I’ve got roughly 400m used on the container as you’d expect to see.

Next, I deleted the container and edited the deployment manifest to add a CPU limit.

In the container’s resources section, the CPU limit is set to 300m. Let’s redeploy this YAML manifest and again increase the CPU usage to 400m.

After redeploying the container and again increasing my CPU load to 400m, we can see that the container is throttled to 300m instead. I’ve effectively “limited” the resources the container could consume from the cluster.

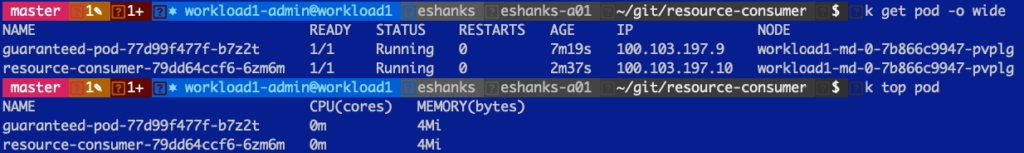
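Why does usage plateau at 300m instead of failing? On Linux nodes, the kubelet turns a CPU limit into a CFS bandwidth quota; with the default 100 ms period, the quota is the limit in cores times the period (this is `cpu.cfs_quota_us` on cgroup v1 and the first field of `cpu.max` on cgroup v2). The sketch below shows that arithmetic, assuming the default period and ignoring contention from other pods.

```python
# CPU limit -> CFS quota, assuming the kubelet's default 100 ms CFS period.
# Demand above the quota is throttled (the process waits), not killed.
from typing import Optional

CFS_PERIOD_US = 100_000  # default CFS period: 100 ms, in microseconds


def cfs_quota_us(limit_millicores: int, period_us: int = CFS_PERIOD_US) -> int:
    """CPU time (microseconds) the container may use in each CFS period."""
    return limit_millicores * period_us // 1000


def effective_cpu_m(demand_m: int, limit_m: Optional[int]) -> int:
    """CPU the container can use, ignoring contention; None means no limit."""
    return demand_m if limit_m is None else min(demand_m, limit_m)


resources = {"limits": {"cpu": "300m"}}  # the limit from the walkthrough above
limit_m = int(resources["limits"]["cpu"].rstrip("m"))
print(cfs_quota_us(limit_m))          # 30000
print(effective_cpu_m(400, limit_m))  # 300
```

In other words, a 300m limit allows 30 ms of CPU time in every 100 ms window, which is exactly the throttling seen in the metrics.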

Next, I’ve deployed two pods into my Kubernetes cluster, both on the same worker node, for a simple example of contention. One is a guaranteed pod with 1000m CPU set as both its request and its limit. The other pod is unbounded, meaning there is no limit on how much CPU it can use.

After deployment, neither pod is really using any resources, as you can see here.

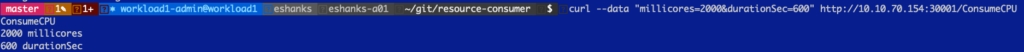

Next, we make a request to increase the load on the non-guaranteed pod.

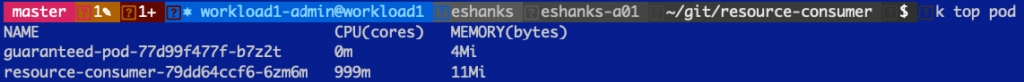

If we look at the container’s resources, you can see that even though the container wants to use 2000m CPU, it is actually only using 1000m CPU. This is because the guaranteed pod is guaranteed 1000m CPU, whether it is actively using that CPU or not.

Kubernetes uses Resource Requests to set a minimum amount of resources for a given container so that they are available when it needs them. You can also set a Resource Limit to cap the maximum amount of resources a container can use.

Taking these two concepts and using them together can ensure that your critical pods always have the resources that they need to stay healthy. They can also be configured to take advantage of shared resources within the cluster.

Be careful not to set resource requests so high that the Kubernetes scheduler can no longer schedule your pods. Good luck!

Since there are many DIGIT services and their code lives in various git repositories, you need to understand the concept of cicd-as-service, which is open source. This page also guides you through the process of creating a CI/CD pipeline.

As a developer, this is the starting point for integrating any new service/app into the CI/CD:

Once the service is ready for integration, decide the service name, the type of service, and whether a DB migration is required. When you commit the service’s source code to the git repository, add the following file with the relevant details mentioned below:

build-config.yml: present under the build directory in each repository.

This file contains the below details which are used for creating the automated Jenkins pipeline job for your newly created service.

While integrating a new service/app, the above content needs to be added to the build-config.yml file of that app’s repository. For example, if we are onboarding a new service called egov-test, the build-config.yml should be added as shown below.

If a job requires multiple images to be created (for example, a separate DB migration image), they should be added as shown below:

Note: if a new repository is created, the build-config.yml should be created under the build folder and the config values added to it.

The git repository URL is then added to the Job Builder parameters.

When the Jenkins Job Builder job is executed, the CI pipeline is created automatically based on the details in build-config.yml. For example, the egov-test job will be created under the core-services folder in Jenkins because its build-config was edited under core-services, and this must be on the “master” branch only. Once the pipeline job is created, it can be executed for any branch by specifying the branch to build (master or any feature branch) as a build parameter.

As a result of the pipeline execution, the respective app/service docker image will be built and pushed to the Docker repository.

The Jenkins CI pipeline is configured and managed 'as code'.

New Service Integration - Example URL - https://builds.digit.org/

Job Builder: Job Builder is a generic Jenkins job that automatically creates the Jenkins pipelines, which are then used to build the application, create its Docker image, and push the image to the Docker repository. The Job Builder job takes the git repository URL as a parameter. It clones the git repository, reads its build/build-config.yml file, and uses it to create the service build job.

Check whether the git repository URL is available in ci.yaml.

If the git repository URL is available, build the Job-Builder job.

If the git repository URL is not available, ask the DevOps team to add it.

The services are deployed and managed on a Kubernetes cluster on cloud platforms like AWS, Azure, GCP, OpenStack, etc. Here, we use Helm charts to manage and generate the Kubernetes manifest files and use them for deployment to the respective Kubernetes cluster. Each service is created as a chart containing the files mentioned below.

To deploy a new service, we need to create the helm chart for it. The chart should be created under the charts/helm directory in DIGIT-DevOps repository.

We have an automatic Helm chart generator utility that needs to be installed on the local machine. The utility prompts for user inputs about the newly developed service (app specifications) and creates the requested chart, with configuration values based on the inputs provided.

Name of the service? test-service

Application Type? NA

Kubernetes health checks to be enabled? Yes

Flyway DB migration container necessary? No

Expose service to the internet? Yes

Route through API gateway [zuul] No

Context path? hello

The generated chart will have the following files.

This chart can also be modified further based on user requirements.

Deploying manifests to the Kubernetes cluster is straightforward. There are state- and environment-specific Jenkins jobs, and we only need to provide the image name or the service name in the respective Jenkins deployment job.


The deployment Jenkins job internally performs the following operations:

Reads the image name or the service name given and finds the chart that is specific to it.

Generates the Kubernetes manifest files from the chart using the Helm template engine.

Applies the deployment manifests with the specified Docker image(s) to the Kubernetes cluster.
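The three steps above can be sketched in Python as follows. This is a hypothetical illustration, not the DIGIT-DevOps job's actual implementation: the chart layout (`charts/helm/<service>`), the helper names, and the `image.tag` values key are assumptions. Only `helm template <name> <chart> --set key=value` and `kubectl apply -f -` are real CLI usages.

```python
import os
import subprocess

CHARTS_ROOT = "charts/helm"  # chart location from the text; exact layout assumed


def find_chart(service: str, charts_root: str = CHARTS_ROOT) -> str:
    """Step 1: locate the chart directory for a service (assumed <root>/<service>)."""
    path = os.path.join(charts_root, service)
    if not os.path.isdir(path):
        raise FileNotFoundError(f"no chart for {service!r} at {path}")
    return path


def render_cmd(service: str, chart_path: str, image_tag: str) -> list[str]:
    """Step 2: the `helm template` command; `image.tag` is a hypothetical values key."""
    return ["helm", "template", service, chart_path, "--set", f"image.tag={image_tag}"]


def deploy(service: str, image_tag: str, charts_root: str = CHARTS_ROOT) -> None:
    chart = find_chart(service, charts_root)
    manifests = subprocess.run(
        render_cmd(service, chart, image_tag),
        check=True, capture_output=True, text=True,
    ).stdout
    # Step 3: apply the rendered manifests to the current kubectl context.
    subprocess.run(["kubectl", "apply", "-f", "-"], input=manifests, check=True, text=True)
```

Usage would look like `deploy("test-service", "v1.0.0")` on a machine with `helm` and `kubectl` configured for the target cluster.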

An overview of the various probes we can set up so that service deployment and service availability are ensured automatically.

Probes determine the state of a service based on readiness, liveness, and startup checks, in order to detect and deal with unhealthy situations. An application may need to initialize some state, make database connections, or load data before it can handle application logic. The gap between when the application is actually ready and when Kubernetes thinks it is ready becomes an issue when the deployment begins to scale and unready applications receive traffic and send back 500 errors.

Many developers assume that a basic pod setup is adequate, especially when the application inside the pod is managed by a daemon process manager (e.g. PM2 for Node.js). However, since Kubernetes deems a pod healthy and ready for requests as soon as all of its containers start, the application may receive traffic before it is actually ready.

Readiness and liveness probes are available in all Kubernetes versions, including ≤ 1.15. Startup probes were added in 1.16 as an alpha feature, graduated to beta in 1.18, and became stable in 1.20 (WARNING: 1.16 deprecated several Kubernetes APIs. Use this migration guide to check for compatibility).

All probes have the following parameters:

initialDelaySeconds : number of seconds to wait after the container starts before the first probe runs

periodSeconds: how often to check the probe

timeoutSeconds: number of seconds before marking the probe as timing out (failing the health check)

successThreshold : minimum number of consecutive successful checks for the probe to pass

failureThreshold : number of consecutive failed checks before the probe is marked as failed. For liveness and startup probes, this leads to the container being restarted. For readiness probes, this marks the pod as unready.
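The parameters above can be modelled as a small Python dataclass. This is a minimal sketch, not a Kubernetes client type: the defaults are the ones the Kubernetes API applies when a field is omitted, the field names mirror the API's camelCase on purpose, and the validation reflects the API rule that liveness and startup probes must use successThreshold = 1. The `approx_seconds_to_fail` helper is a hypothetical rough estimate that ignores timeouts and probe jitter.

```python
from dataclasses import dataclass


@dataclass
class Probe:
    kind: str                      # "readiness", "liveness" or "startup"
    initialDelaySeconds: int = 0   # API defaults when a field is omitted
    periodSeconds: int = 10
    timeoutSeconds: int = 1
    successThreshold: int = 1
    failureThreshold: int = 3

    def __post_init__(self) -> None:
        if self.kind in ("liveness", "startup") and self.successThreshold != 1:
            raise ValueError(f"{self.kind} probes must have successThreshold = 1")

    def approx_seconds_to_fail(self) -> int:
        """Rough time from container start until the probe is marked failed,
        if every check fails (ignores timeouts and jitter)."""
        return self.initialDelaySeconds + self.failureThreshold * self.periodSeconds


print(Probe("liveness").approx_seconds_to_fail())  # 30
```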

Readiness probes let the kubelet know when the application is ready to accept new traffic. If the application needs some time to initialize state after the process has started, configure the readiness probe to tell Kubernetes to wait before sending new traffic. A primary use case for readiness probes is directing traffic to deployments behind a service.

One important thing to note is that readiness probes run during the pod’s entire lifecycle: not only at startup, but repeatedly for as long as the pod is running. This handles situations where the application is temporarily unavailable (e.g. loading large data, waiting on external connections). In this case, we don’t necessarily want to kill the application, but to wait for it to recover. Readiness probes detect this scenario, and traffic is not sent to the pod until it passes the readiness check again.

On the other hand, liveness probes are used to restart unhealthy containers. The kubelet periodically runs the liveness probe, determines the container’s health, and kills the container if it fails the liveness check. Liveness checks can help the application recover from a deadlock. Without liveness checks, Kubernetes deems a deadlocked pod healthy, since from Kubernetes’s perspective the underlying process is still running. By configuring a liveness probe, the kubelet can detect that the application is in a bad state and restart the container to restore availability.

Startup probes are similar to readiness probes but are only executed at startup. They are optimized for slow-starting containers or applications with unpredictable initialization processes. With readiness probes, we can configure initialDelaySeconds to determine how long to wait before probing for readiness. Now consider an application that occasionally needs to download large amounts of data or do an expensive operation at the start of the process. Since initialDelaySeconds is a static number, we are forced to always assume the worst-case scenario (or extend the failureThreshold, which may affect long-running behaviour) and wait a long time even when the application does not need to carry out long-running initialization steps. With startup probes, we can instead configure failureThreshold and periodSeconds to model this uncertainty better. For example, setting failureThreshold to 15 and periodSeconds to 5 means the application will get 15 x 5 = 75s to start up before it fails.
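The startup-probe arithmetic can be written out as two small helpers. This is a minimal sketch; the helper names are hypothetical, and it uses the same failureThreshold x periodSeconds rule as the example above, plus the inverse calculation for picking a threshold from a known worst-case startup time.

```python
def startup_window_seconds(failure_threshold: int, period_seconds: int) -> int:
    """Time the application gets to start before the startup probe fails."""
    return failure_threshold * period_seconds


def failure_threshold_for(worst_case_startup_s: int, period_seconds: int) -> int:
    """Smallest failureThreshold whose window covers the worst-case startup."""
    return -(-worst_case_startup_s // period_seconds)  # ceiling division


print(startup_window_seconds(15, 5))   # 75
print(failure_threshold_for(300, 10))  # 30
```

Unlike a fixed initialDelaySeconds, this window is only an upper bound: the first successful check ends the startup phase early.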

Now that we understand the different types of probes, we can examine the three different ways to configure each probe.

The kubelet sends an HTTP GET request to an endpoint and checks for a 2xx or 3xx response. You can reuse an existing HTTP endpoint or set up a lightweight HTTP server for probing purposes (e.g. an Express server with /healthz endpoint).

HTTP probes take in additional parameters:

host : hostname to connect to (default: pod’s IP)

scheme : HTTP (default) or HTTPS

path : path on the HTTP/S server

httpHeaders : custom headers if you need header values for authentication, CORS settings, etc.

port : name or number of the port to access the server
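The lightweight HTTP server mentioned above can be as small as the sketch below. The text's example uses Express; this is the same idea using only Python's standard library. The `/healthz` path and port are assumptions, and the handler returns 200 (inside the 200 to 399 range the kubelet treats as success) for the health path and 404 otherwise.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status = 200 if self.path == "/healthz" else 404
        body = b"ok" if status == 200 else b"not found"
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep frequent probe requests out of the logs


def make_health_server(port: int = 8080, host: str = "0.0.0.0") -> HTTPServer:
    return HTTPServer((host, port), HealthHandler)
```

Run it with `make_health_server().serve_forever()`, then point an HTTP probe at path `/healthz` on port 8080.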

If you just need to check whether a TCP connection can be made, you can specify a TCP probe. The container is marked healthy if the kubelet can establish a TCP connection. A TCP probe may be useful for a gRPC or FTP server where HTTP calls may not be suitable.
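The pass/fail rule of a TCP probe can be reproduced in a few lines, which is handy for checking a port by hand. This is a minimal sketch of the rule, not the kubelet's code: the check passes if a TCP connection opens within the timeout, and the default timeout mirrors timeoutSeconds = 1.

```python
import socket


def tcp_probe(host: str, port: int, timeout_s: float = 1.0) -> bool:
    """True if a TCP connection can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:  # refused, unreachable or timed out
        return False
```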

Finally, a probe can be configured to run a shell command. The check passes if the command returns with exit code 0; otherwise, the pod is marked as unhealthy. This type of probe may be useful if it is not desirable to expose an HTTP server/port or if it is easier to check initialization steps via command (e.g. check if a configuration file has been created, run a CLI command).
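The exec probe's rule (exit code 0 means healthy) can be sketched the same way. The helper below is hypothetical. It treats a command that cannot be started, or that exceeds the timeout, as a failure. A config-file check like the one the text mentions might be `exec_probe(["test", "-f", "/etc/app/config.yaml"])`, where the path is made up.

```python
import subprocess


def exec_probe(command: list[str], timeout_s: float = 1.0) -> bool:
    """True if the command exits with code 0 within the timeout."""
    try:
        result = subprocess.run(command, capture_output=True, timeout=timeout_s)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0
```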

The exact parameters for the probes depend on your application, but here are some general best practices to get started:

For older (≤ 1.15) Kubernetes clusters, use a readiness probe with an initial delay to deal with the container startup phase (use p99 startup times for this). But keep this check lightweight, since the readiness probe executes throughout the entire lifecycle of the pod; we don’t want the probe to time out because the readiness check takes a long time to compute.

For newer (≥ 1.16) Kubernetes clusters, use a startup probe for applications with unpredictable or variable startup times. The startup probe may share the same endpoint (e.g. /healthz) as the readiness and liveness probes, but set its failureThreshold higher than that of the other probes to account for longer start times, while keeping a more reasonable time to failure for the liveness and readiness checks.
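One way to apply these guidelines is to derive the startup probe's failureThreshold from observed startup times. The sketch below is hypothetical: the sample data, the `/healthz` path, port 8080, and the threshold of 3 for readiness and liveness are assumptions. It computes a nearest-rank p99 and gives only the startup probe a threshold large enough to cover it, while all three probes share one endpoint.

```python
import math

PERIOD_S = 10
HEALTH_CHECK = {"httpGet": {"path": "/healthz", "port": 8080}}  # hypothetical endpoint


def p99(samples: list[float]) -> float:
    """Nearest-rank 99th percentile."""
    ordered = sorted(samples)
    rank = math.ceil(0.99 * len(ordered))
    return ordered[rank - 1]


def probes_for(startup_samples_s: list[float], period_s: int = PERIOD_S) -> dict:
    # Enough startup attempts to cover the p99 startup time, never fewer
    # than the readiness/liveness threshold.
    startup_threshold = max(3, math.ceil(p99(startup_samples_s) / period_s))
    return {
        "startupProbe": {**HEALTH_CHECK, "periodSeconds": period_s,
                         "failureThreshold": startup_threshold},
        "readinessProbe": {**HEALTH_CHECK, "periodSeconds": period_s, "failureThreshold": 3},
        "livenessProbe": {**HEALTH_CHECK, "periodSeconds": period_s, "failureThreshold": 3},
    }


observed = [30, 32, 35, 40, 41, 45, 50, 52, 60, 118]  # made-up startup times (s)
print(probes_for(observed)["startupProbe"]["failureThreshold"])  # 12
```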

Readiness and liveness probes may share the same endpoint if the readiness probes aren’t used for other signalling purposes. If there’s only one pod (e.g. when using a Vertical Pod Autoscaler), set the readiness probe to address the startup behaviour and use the liveness probe to determine health. In this case, marking the pod unhealthy means downtime.

Readiness checks can be used in various ways to signal system degradation. For example, if the application loses connection to the database, readiness probes may be used to temporarily block new requests and allow the system to reconnect. It can also be used to load balance work to other pods by marking busy pods as not ready.
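A readiness handler that signals degradation might make its decision as sketched below. This is hypothetical: the state flags and the `max_in_flight` threshold are assumptions. Readiness returns 503 (a failing status) while the database is unreachable or the pod is saturated, so the Service stops routing new requests to it. Liveness stays at 200 so the pod is not restarted for a condition it can recover from.

```python
from dataclasses import dataclass


@dataclass
class AppState:
    db_connected: bool = True
    in_flight: int = 0
    max_in_flight: int = 100  # hypothetical saturation threshold


def readiness_status(state: AppState) -> int:
    if not state.db_connected or state.in_flight >= state.max_in_flight:
        return 503  # outside 200-399, so the readiness probe fails
    return 200


def liveness_status(state: AppState) -> int:
    # The process itself is alive; a lost DB connection should not trigger a restart.
    return 200
```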

In short, well-defined probes generally lead to better resilience and availability. Be sure to observe the startup times and system behaviour to tweak the probe settings as the applications change.

Finally, given the importance of Kubernetes probes, you can use a Kubernetes resource analysis tool to detect missing probes. These tools can be run against existing clusters or be baked into the CI/CD process to automatically reject workloads without properly configured resources.

Polaris: a resource analysis tool with a nice dashboard that can also be used as a validating webhook or CLI tool.

Kube-score: a static code analysis tool that works with Helm, Kustomize, and standard YAML files.

Popeye: read-only utility tool that scans Kubernetes clusters and reports potential issues with configurations.
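To illustrate the kind of check these tools perform in a CI/CD step, here is a minimal, hypothetical sketch. It is not Polaris's, kube-score's, or Popeye's actual rule set. It reports containers in an already-parsed pod spec that lack readiness/liveness probes or CPU/memory requests, and returns a non-zero exit code so a pipeline can reject the workload.

```python
import sys

REQUIRED_PROBES = ("readinessProbe", "livenessProbe")
REQUIRED_REQUESTS = ("cpu", "memory")


def find_issues(pod_spec: dict) -> list[str]:
    issues = []
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        for probe in REQUIRED_PROBES:
            if probe not in container:
                issues.append(f"{name}: missing {probe}")
        requests = container.get("resources", {}).get("requests", {})
        for resource in REQUIRED_REQUESTS:
            if resource not in requests:
                issues.append(f"{name}: missing requests.{resource}")
    return issues


def ci_gate(pod_spec: dict) -> int:
    """Exit code for a CI step: non-zero if any issue is found."""
    issues = find_issues(pod_spec)
    for issue in issues:
        print(issue, file=sys.stderr)
    return 1 if issues else 0
```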

All content on this page by eGov Foundation is licensed under a Creative Commons Attribution 4.0 International License.


Since DIGIT is a container-based platform orchestrated on Kubernetes, let’s discuss some key security practices for protecting the infrastructure.

Security is always a difficult subject to approach, whether because of a lack of experience or because you need to know when the level of security is right for what you have to secure.

Security is a major concern when it comes to government systems and infrastructure. As architects, we might assume that working with technically educated people (engineers, experts) and tools (systems, frameworks, IDEs) should prevent key VAPT issues.

However, it is quite difficult to stop certain people from being tempted to try to hack the systems.

Each release brings not only bug fixes but also new security measures. To take advantage of them, we recommend working with the latest stable version.

Updates and support can be more challenging than adopting the new features offered in releases, so plan your updates at least once a quarter. Managed Kubernetes providers can significantly simplify updates.

Use RBAC (Role-Based Access Control) to regulate who can access the cluster and what rights they have. RBAC is usually enabled by default in Kubernetes 1.6 and later (later still for some providers), but if your cluster has been upgraded since then and you didn’t change the configuration, you should double-check your settings.

However, enabling RBAC isn’t enough; it still has to be used effectively. In general, cluster-wide rights should be avoided in favour of rights in specific namespaces. Avoid giving anyone cluster administrator privileges, even for debugging; it is much safer to grant only the rights that are necessary, and only temporarily.

If an application requires access to the Kubernetes API, create separate service accounts and give them the minimum set of rights required for each use case. This approach is far better than granting excessive privileges to the default service account in the namespace.
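A least-privilege review of RBAC rules can be partly automated. The sketch below is hypothetical and operates on parsed manifests as Python dicts. It flags ClusterRoles, since namespaced Roles are preferred, and flags any rule that uses the `*` wildcard in apiGroups, resources or verbs. The example Role, which only reads ConfigMaps in a made-up `egov` namespace, passes cleanly.

```python
def rbac_warnings(role: dict) -> list[str]:
    name = role["metadata"]["name"]
    warnings = []
    if role.get("kind") == "ClusterRole":
        warnings.append(f"{name}: cluster-wide role; prefer a namespaced Role")
    for i, rule in enumerate(role.get("rules", [])):
        for field in ("apiGroups", "resources", "verbs"):
            if "*" in rule.get(field, []):
                warnings.append(f"{name}: rule {i} uses '*' in {field}")
    return warnings


# Hypothetical minimal Role for a service account that only reads ConfigMaps.
configmap_reader = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "configmap-reader", "namespace": "egov"},
    "rules": [{"apiGroups": [""], "resources": ["configmaps"],
               "verbs": ["get", "list", "watch"]}],
}
print(rbac_warnings(configmap_reader))  # []
```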

Creating separate namespaces is vital, as it is the first level of component isolation. It is much easier to control security settings, such as network policies, when different types of workloads are deployed in separate namespaces.


A good practice for limiting the potential consequences of a compromise is to run workloads with sensitive data on a dedicated set of machines. This reduces the risk of a less secure application accessing an application with sensitive data that runs in the same container runtime environment or on the same host.

For example, the kubelet of a compromised node usually has access to the contents of secrets only if they are mounted on pods scheduled on that node. If important secrets are spread across many cluster nodes, an attacker has more opportunities to obtain them.

Separation can be done using node pools (in the cloud or on-premises), as well as Kubernetes control mechanisms such as namespaces, taints, tolerations, and others.

Sensitive metadata, for instance kubelet administrative credentials, can be stolen or misused to escalate privileges in a cluster. For example, a recent finding in Shopify’s bug bounty program showed in detail how a user could escalate privileges by obtaining metadata from a cloud provider using specially crafted data for one of the microservices.

The GKE metadata concealment feature changes the cluster deployment mechanism in a way that avoids this problem, and we recommend using it until a permanent solution is implemented.

Network Policies allow you to control network access in and out of containerized applications. To use them, you must have a network provider that supports this resource. For managed Kubernetes providers such as Google Kubernetes Engine (GKE), support needs to be enabled.

Once everything is ready, start with simple default network policies, for example blocking traffic from other namespaces by default.
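A default policy that blocks traffic from other namespaces can be generated as in the sketch below. The helper and the `egov` namespace are hypothetical, while the manifest fields are the standard `networking.k8s.io/v1` NetworkPolicy schema. The empty `podSelector` selects every pod in the namespace, and the single ingress rule admits traffic only from pods in that same namespace, because a `podSelector` without a `namespaceSelector` matches the policy's own namespace. Since kubectl accepts JSON, the output can be piped to `kubectl apply -f -`.

```python
import json


def deny_from_other_namespaces(namespace: str) -> dict:
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "deny-from-other-namespaces", "namespace": namespace},
        "spec": {
            "podSelector": {},                         # applies to all pods here
            "policyTypes": ["Ingress"],
            "ingress": [{"from": [{"podSelector": {}}]}],  # same-namespace pods only
        },
    }


print(json.dumps(deny_from_other_namespaces("egov"), indent=2))
```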

A Pod Security Policy sets the defaults used to start workloads in the cluster. Consider defining a policy and enabling the Pod Security Policy admission controller; the instructions for these steps vary depending on the cloud provider or deployment model used. Note that PodSecurityPolicy was deprecated in Kubernetes 1.21 and removed in 1.25, where Pod Security Admission takes its place.

As a starting point, you might want to drop the NET_RAW capability in containers to protect against certain types of spoofing attacks.
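Dropping NET_RAW is done per container through `securityContext.capabilities.drop`, a standard container spec field. The helper below is a hypothetical sketch that adds it to every container of a parsed pod spec in place. It leaves containers alone if they already drop NET_RAW or ALL.

```python
def drop_net_raw(pod_spec: dict) -> dict:
    for container in pod_spec.get("containers", []):
        caps = container.setdefault("securityContext", {}).setdefault("capabilities", {})
        dropped = caps.setdefault("drop", [])
        if "NET_RAW" not in dropped and "ALL" not in dropped:
            dropped.append("NET_RAW")
    return pod_spec
```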

To improve host security, you can follow these steps:

Ensure that the host is securely and correctly configured. One way to do this is to follow the CIS Benchmarks; many products have an auto-checker that verifies a system’s compliance with these standards.

Monitor network access to important ports. Ensure that the network blocks access to the ports used by the kubelet, including 10250 and 10255. Consider restricting access to the Kubernetes API server to trusted networks only. In clusters that did not require authentication and authorization on the kubelet API, attackers have used access to these ports to launch cryptocurrency miners.

Minimize administrative access to Kubernetes hosts. Access to cluster nodes should in principle be limited: for debugging and solving other problems, you can usually do without direct access to the node.

Make sure that audit logs are enabled and that you are monitoring for the occurrence of unusual or unwanted API calls in them, especially in the context of any authorization failures — such entries will have a message with the “Forbidden” status. Authorization failures can mean that an attacker is trying to take advantage of the credentials obtained.
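Spotting authorization failures in an audit log can start as simply as the sketch below. It assumes the API server writes one JSON event per line, and it treats events whose `responseStatus.code` is 403 as authorization failures, counting them per `user.username`. The sample events are made up and heavily abbreviated; real audit events carry many more fields.

```python
import json
from collections import Counter


def forbidden_by_user(lines: list[str]) -> Counter:
    """Count authorization failures (HTTP 403) per requesting user."""
    counts: Counter = Counter()
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        event = json.loads(line)
        if event.get("responseStatus", {}).get("code") == 403:
            counts[event.get("user", {}).get("username", "<unknown>")] += 1
    return counts


sample = [
    '{"verb": "list", "user": {"username": "system:serviceaccount:default:app"}, "responseStatus": {"code": 403}}',
    '{"verb": "get", "user": {"username": "alice"}, "responseStatus": {"code": 200}}',
]
for user, n in forbidden_by_user(sample).items():
    print(f"{user}: {n} forbidden request(s)")
```

A sudden rise in these counts for a single service account is the kind of signal worth alerting on.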

Managed solution providers (including GKE) provide access to this data in their interfaces and can help you set up notifications in case of authorization failures.

Follow these guidelines for a more secure Kubernetes cluster. Remember that even after the cluster is configured securely, you need to ensure security in other aspects of the configuration and operation of containers. To improve the security of the technology stack, study the tools that provide a central system for managing deployed containers, constantly monitoring and protecting containers and cloud-native applications.


This section contains a list of documents elaborating on the key concepts aiding the deployment of the DIGIT platform.
